Flower Recognition

Oxford Flowers 102 [23] contains 8189 images divided into 102 categories, with 40 to 250 images per category. The figure below shows some examples from five categories, one category per column. Segmenting the flower before classification has been shown to improve the final performance. Rather than focusing on segmentation itself, we use GrabCut to segment one flower instance from each image. It has also been suggested that color information can improve recognition performance. We resize the foreground images to a minimum resolution of 128 and extract the color PRI-CoLBPg feature on the foreground region.

State-of-the-art Methods:

The work of [23] is widely recognized as the state-of-the-art method. In [23], Nilsback and Zisserman proposed applying multiple kernel learning (MKL) to fuse four features. Using the same features, Yuan and Yan [42] proposed multi-task joint sparse representation for feature combination instead of MKL. In [4], Ito and Kubota proposed several co-occurrence features and applied them to flower recognition, but the performance is not satisfactory. In [5], Kanan and Cottrell proposed combining a saliency map with naive Bayes nearest neighbor (NBNN). Instead of SIFT features, they use a filter-based feature similar to GIST. Unlike SIFT, this kind of filter-based feature captures more spatial layout, and its descriptive region is considerably larger than that of traditional local features. Recently, Chai et al. [27] reported better results on flower recognition. Their paper mainly focuses on improving the segmentation. They also modified the four features used in [23]: they apply Multi Scale Dense Sampling (MSDS) SIFT instead of single-scale dense sampling, and use locality-constrained linear coding (LLC) for feature encoding instead of hard assignment. Finally, they use AKA and a linear SVM classifier.
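The resizing step in the preprocessing described above can be illustrated with a short sketch. It assumes that "minimum resolution 128" means the shorter image side is scaled to 128 pixels while the aspect ratio is kept; the text does not state this explicitly, and the function name `resize_to_min_side` is hypothetical. Only the target dimensions are computed here, not the resampling itself.

```python
from typing import Tuple


def resize_to_min_side(width: int, height: int, min_side: int = 128) -> Tuple[int, int]:
    """Return (new_width, new_height) with the shorter side equal to min_side.

    Assumption: the aspect ratio is preserved and the shorter side is
    mapped to min_side, whether that means shrinking or enlarging.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = min_side / min(width, height)
    # max() guards against rounding pushing the shorter side below min_side.
    new_width = max(min_side, round(width * scale))
    new_height = max(min_side, round(height * scale))
    return new_width, new_height


if __name__ == "__main__":
    print(resize_to_min_side(500, 250))
```

The resulting size would then be passed to whatever image library performs the actual resampling of the segmented foreground.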

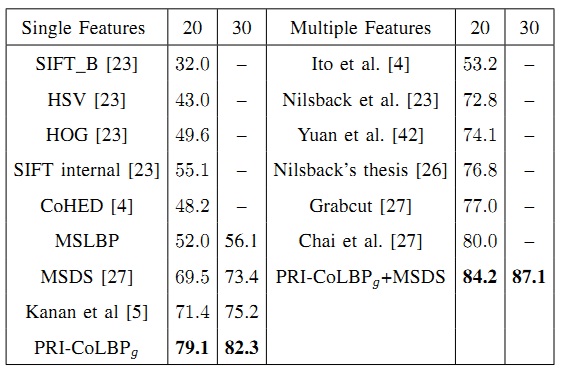

We follow the same training and testing protocol as [27], using 20 or 30 samples for training and the rest for testing. We compare PRI-CoLBPg with several other features and evaluate some feature combinations. The experimental results are shown below. All results are taken from the original papers except those for MSLBP, COHED and MSDS. For MSLBP, we extract features on three channels, so its dimension is 54*3=162. We reimplement COHED according to [4]. Following [27], we also reimplement the bag of MSDS and obtain 69.5%, which is close to their reported 70.0%.
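The split protocol above can be sketched as follows. This is a minimal illustration, assuming the 20 or 30 training samples are drawn per category and selected at random; the splits actually used in [27] may be fixed and are not reproduced here. The function name `split_per_class` and the `seed` parameter are hypothetical.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def split_per_class(
    labels: Sequence[Hashable], n_train: int, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Pick n_train sample indices per class for training, the rest for testing."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        if len(indices) <= n_train:
            raise ValueError(f"class {label!r} has too few samples for n_train={n_train}")
        rng.shuffle(indices)  # random selection is an assumption of this sketch
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])
    return train, test


if __name__ == "__main__":
    # Toy labels: two classes with 40 and 50 samples (not the real dataset).
    toy_labels = [0] * 40 + [1] * 50
    train_idx, test_idx = split_per_class(toy_labels, n_train=20)
    print(len(train_idx), len(test_idx))
```

Since every category has at least 40 images, both `n_train=20` and `n_train=30` leave at least 10 test images per category.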

Experimental Analysis:

From the table, for single features, PRI-CoLBPg outperforms MSLBP by 27% and outperforms another co-occurrence feature (COHED) by more than 3%. In addition, PRI-CoLBPg outperforms the two best current single-feature methods: it improves on MSDS by about 10% and on the work of [5] by 7.7%.

For methods that combine multiple features, PRI-CoLBPg combined with MSDS achieves 84.2%, clearly exceeding the 80.0% of [27], which combines four types of features. The combination also clearly outperforms each of the two features alone, which indicates that PRI-CoLBPg is complementary to MSDS.

There are several reasons why PRI-CoLBPg achieves high performance on flower recognition. First, flower recognition is closely related to texture classification, and PRI-CoLBPg is effective at capturing texture information. Second, color information is useful for flower recognition, and it can easily be encoded into PRI-CoLBPg by extracting PRI-CoLBPg on three channels. Finally, flower images contain strong shape structures; as described in the paper, PRI-CoLBPg puts more weight on shape regions and is therefore more effective at capturing shape information.
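Encoding color by extracting a descriptor on each of three channels amounts to concatenating the per-channel feature vectors. The sketch below illustrates this under stated assumptions: `toy_histogram` is a hypothetical stand-in for a single-channel descriptor and is not PRI-CoLBPg, and simple concatenation is assumed as the way the channels are merged.

```python
from typing import Callable, List, Sequence

Channel = Sequence[Sequence[int]]  # rows of 8-bit intensity values


def toy_histogram(channel: Channel, bins: int = 4) -> List[float]:
    """Hypothetical single-channel descriptor: a normalized intensity histogram."""
    hist = [0.0] * bins
    count = 0
    for row in channel:
        for value in row:
            hist[min(value * bins // 256, bins - 1)] += 1.0
            count += 1
    return [h / count for h in hist] if count else hist


def color_descriptor(
    channels: Sequence[Channel], describe: Callable[[Channel], List[float]]
) -> List[float]:
    """Concatenate the descriptor of each channel into one color feature."""
    feature: List[float] = []
    for channel in channels:
        feature.extend(describe(channel))
    return feature


if __name__ == "__main__":
    # Toy 2x2 channels, for illustration only.
    red = [[0, 255], [0, 255]]
    green = [[128, 128], [128, 128]]
    blue = [[0, 0], [0, 0]]
    print(len(color_descriptor([red, green, blue], toy_histogram)))
```

With a 54-dimensional single-channel descriptor, the same stacking yields the 54*3=162 dimensions stated for MSLBP.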

Among the 102 categories, several have low recognition accuracy, and rose is a typical example. The low accuracy has three causes. First, the viewpoint varies a lot: some roses are frontal, some are seen from the back, and many are lateral. Second, the rose category covers multiple colors, almost 10 in total. Finally, even among frontal images, the shape varies a lot. We also find several pairs of categories that are easily confused with each other, such as lotus and water lily.
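Low-accuracy categories and easily confused pairs of this kind can be read off the test predictions. The following is a minimal sketch of such an analysis, not the procedure used in the paper; the function names are hypothetical and the labels in the example are made-up toy data, not experimental results.

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple


def per_class_accuracy(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Fraction of correctly classified samples for each true class."""
    total = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / total[label] for label in total}


def most_confused_pairs(
    y_true: Sequence[str], y_pred: Sequence[str], top_k: int = 3
) -> List[Tuple[Tuple[str, str], int]]:
    """Count misclassifications per unordered class pair, most frequent first."""
    pairs: Counter = Counter()
    for t, p in zip(y_true, y_pred):
        if t != p:
            pairs[tuple(sorted((t, p)))] += 1
    return pairs.most_common(top_k)


if __name__ == "__main__":
    # Toy labels for illustration only.
    truth = ["lotus", "lotus", "water lily", "water lily", "rose", "rose"]
    preds = ["water lily", "lotus", "lotus", "water lily", "rose", "lotus"]
    print(per_class_accuracy(truth, preds))
    print(most_confused_pairs(truth, preds, top_k=1))
```

Counting unordered pairs treats a lotus predicted as water lily and a water lily predicted as lotus as the same confusion, which matches the notion of two categories being mixed up with each other.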

Acknowledgments

We thank Andrew Zisserman's group for valuable discussions. This work was first motivated by work on a flower recognition project. Their work on flower segmentation and recognition is excellent.

References

[4] S. Ito and S. Kubota, “Object classification using heterogeneous co-occurrence features,” ECCV, 2010.

[5] C. Kanan and G. Cottrell, “Robust classification of objects, faces, and flowers using natural image statistics,” CVPR, 2010.

[23] M. Nilsback and A. Zisserman, “Automated flower classification over a large number of classes,” ICVGIP, 2008.

[26] M. Nilsback, “An automatic visual flora - segmentation and classification of flower images,” PhD thesis, University of Oxford.

[27] Y. Chai, V. Lempitsky, and A. Zisserman, “Bicos: A bi-level co-segmentation method for image classification,” ICCV, 2011.

[42] X. Yuan and S. Yan, “Visual classification with multi-task joint sparse representation,” CVPR, 2010.