Texture Classification

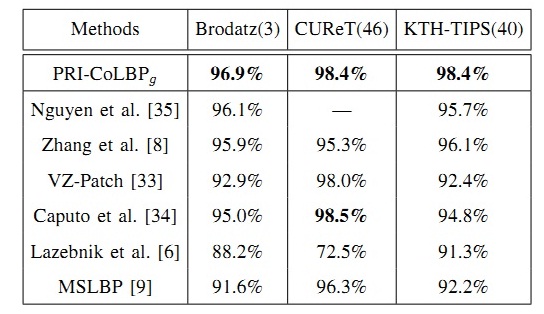

The Brodatz texture album is a well-known benchmark dataset. It contains 111 texture classes, each with 9 images. In our experiments, we use 3 images per class for training and the remaining 6 for testing.

The CUReT dataset is another widely used texture benchmark. We use the same subset of images as [33, 34], which contains 61 texture classes with 92 images per class. These images are captured under different illumination conditions and seven different viewing directions. We use 46 training images per class and the remaining 46 for testing.

The KTH-TIPS dataset consists of 10 texture classes. Images are captured at nine scales, under three different illumination directions and three different poses, giving 81 images per class. Following the standard setup, we use 40 images per class for training and the remaining 41 for testing.

State-of-the-art Methods: In [6], Lazebnik et al. proposed detecting interest points with Harris-affine corners and Laplacian-affine blobs, and describing them with SPIN and RIFT descriptors for texture classification; a nearest neighbor (NN) classifier was used for classification. Instead of an NN classifier, Caputo et al. [34] used a kernel SVM classifier and showed that it achieves better performance than the NN classifier on texture classification. The method of Zhang et al. [8], which evaluates local features and kernels for object and texture classification, is widely regarded as the state of the art. In [35], Nguyen et al. proposed using multivariate log-Gaussian Cox processes for texture classification.

The experimental results are reported in the table. All results are those reported in the original papers, except for multi-scale LBP (MSLBP) [9], which is computed with the standard implementation available at http://www.cse.oulu.fi/MVG/Downloads and classified with a one-vs-rest χ² kernel SVM. In our experiments, the one-vs-rest strategy outperformed the one-vs-one strategy.
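
The evaluation protocol described above (a random per-class split into training and test images, followed by a χ² kernel SVM trained one-vs-rest) can be sketched in plain Python as below. This is a minimal illustration, not the code used for the reported results: the exponential kernel form exp(-γ·χ²), the value of γ, the random seed, and all function names are assumptions, and the SVM solver itself is omitted. The Gram matrix is what one would pass to an SVM library that accepts precomputed kernels.

```python
import math
import random

# Training images per class, as described above (the rest are test images).
TRAIN_PER_CLASS = {"Brodatz": 3, "CUReT": 46, "KTH-TIPS": 40}


def split_per_class(images_by_class, n_train, seed=0):
    """Randomly assign n_train images of each class to training, the rest to testing."""
    rng = random.Random(seed)
    train, test = [], []
    for label, images in images_by_class.items():
        shuffled = list(images)
        rng.shuffle(shuffled)
        train += [(img, label) for img in shuffled[:n_train]]
        test += [(img, label) for img in shuffled[n_train:]]
    return train, test


def chi2_distance(x, y, eps=1e-10):
    """Chi-square distance between two non-negative feature histograms."""
    return sum((a - b) ** 2 / (a + b + eps) for a, b in zip(x, y))


def chi2_kernel(x, y, gamma=1.0):
    """Exponential chi-square kernel exp(-gamma * chi2(x, y)); an assumed form."""
    return math.exp(-gamma * chi2_distance(x, y))


def gram_matrix(rows, cols, gamma=1.0):
    """K[i][j] = chi2_kernel(rows[i], cols[j]), e.g. for a precomputed-kernel SVM."""
    return [[chi2_kernel(r, c, gamma) for c in cols] for r in rows]


def one_vs_rest_predict(decision_values):
    """One binary SVM per class: predict the class with the largest decision value."""
    return max(range(len(decision_values)), key=lambda k: decision_values[k])


# Toy usage: two hypothetical classes with 9 images each, split 3/6 as for Brodatz.
images = {c: [f"{c}_{i}" for i in range(9)] for c in ("bark", "brick")}
train, test = split_per_class(images, TRAIN_PER_CLASS["Brodatz"])
print(len(train), len(test))  # 6 12
```

With a precomputed-kernel SVM, the training kernel would be `gram_matrix(train_features, train_features)` and the test kernel `gram_matrix(test_features, train_features)` (both feature lists hypothetical); one binary classifier per class then supplies the decision values passed to `one_vs_rest_predict`.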

Experimental Analysis:

From the table, PRI-CoLBPg significantly outperforms MSLBP on all three datasets. It also clearly exceeds the performance of [8] and [35] on all three datasets: compared with [8] and [35], it reduces the average error rate from about 4% to about 2%, which is equivalent to eliminating roughly 50% of the errors. PRI-CoLBPg greatly outperforms MSLBP because it captures co-occurrence within a spatial context and preserves relative orientation angle information, both of which are highly discriminative, whereas multi-scale LBP ignores spatial information. Although KTH-TIPS contains scale variation (9 scales, 81 images per class), the scale changes continuously from 0.5 to 2, so the variation is relatively small. PRI-CoLBPg therefore works well on this dataset, achieving 98.4% accuracy and significantly outperforming the best competing result of 96.1%. This result on KTH-TIPS indirectly suggests that PRI-CoLBPg is not sensitive to small scale variations.
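
The "50%" above is a relative reduction in error, not a drop in percentage points. The arithmetic can be checked with a few lines of Python using only the figures quoted in this section; the helper names are hypothetical.

```python
def error_rate(accuracy_pct):
    """Error rate (%) corresponding to a classification accuracy (%)."""
    return 100.0 - accuracy_pct


def relative_error_reduction(old_error_pct, new_error_pct):
    """Fraction of the baseline's errors that the new method removes."""
    return (old_error_pct - new_error_pct) / old_error_pct


# Average error rate versus [8] and [35]: about 4% -> about 2%.
print(relative_error_reduction(4.0, 2.0))  # 0.5

# KTH-TIPS: 96.1% -> 98.4% accuracy, i.e. 3.9% -> 1.6% error.
kth = relative_error_reduction(error_rate(96.1), error_rate(98.4))
print(round(kth, 2))  # 0.59
```

By the same measure, the KTH-TIPS gain of 2.3 percentage points corresponds to removing roughly 59% of the errors of the best competing method.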

References

[6] S. Lazebnik, C. Schmid, and J. Ponce, “A sparse texture representation using local affine regions,” PAMI, 2005.

[8] J. Zhang, M. Marszalek, S. Lazebnik, and C. Schmid, “Local features and kernels for classification of texture and object categories: A comprehensive study,” CVPR, 2007.

[9] T. Ojala, M. Pietikäinen, and T. Mäenpää, “Multiresolution gray-scale and rotation invariant texture classification with local binary patterns,” PAMI, 2002.

[33] M. Varma and A. Zisserman, “A statistical approach to material classification using image patch exemplars,” PAMI, 2008.

[34] B. Caputo, E. Hayman, M. Fritz, and J. Eklundh, “Classifying materials in the real world,” Image and Vision Computing, 2010.

[35] H. Nguyen, R. Fablet, and J. Boucher, “Visual textures as realizations of multivariate log-Gaussian Cox processes,” CVPR, 2011.